Objectives of the Product

constellr, a European leader in thermal intelligence and satellite technology, provides detailed insights into Earth’s resources and land use through proprietary space-based infrared detection. The key objective of constellr’s product is to use high-precision Land Surface Temperature (LST) data to give stakeholders near-real-time thermal insights, so that they can proactively address challenges in agriculture, urban planning, and infrastructure management.

Temperature is a critical variable for understanding stresses and anomalies in ecosystems, urban zones, and infrastructure. By detecting issues such as crop stress, urban heat patterns, and infrastructure vulnerabilities early, constellr’s thermal intelligence enables precise interventions that mitigate risks and optimise outcomes. These actionable insights support yield predictions, policy development, and supply chain optimisation, enabling better-informed, data-driven decisions.

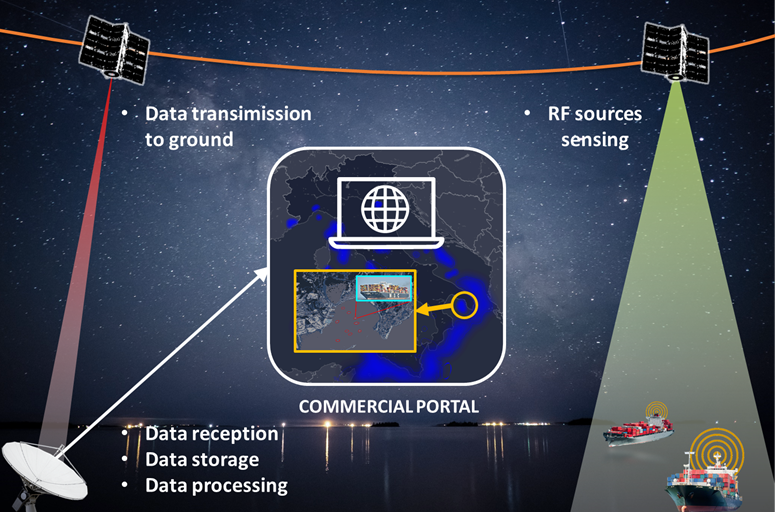

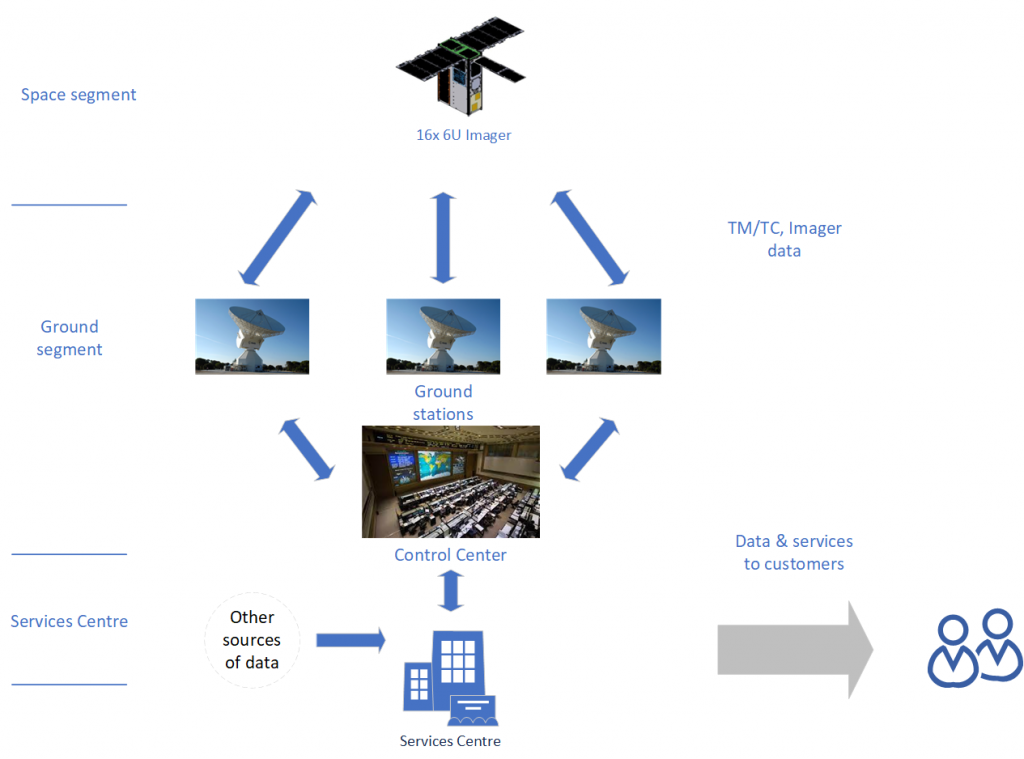

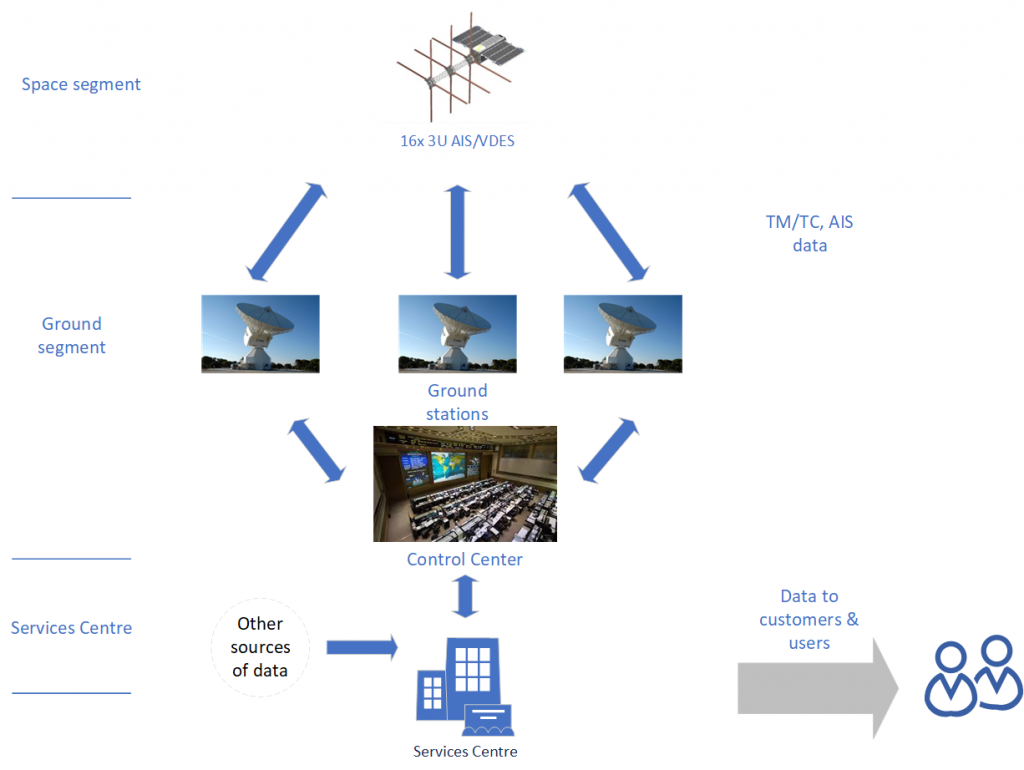

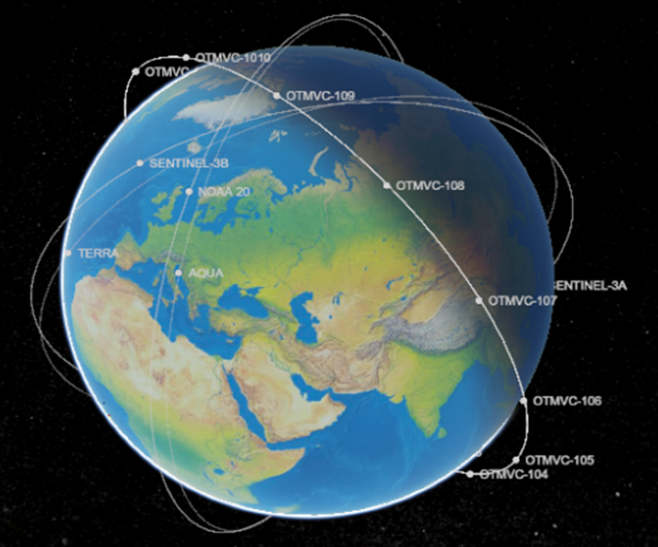

To achieve this, constellr uses hyperscale cloud infrastructure and a global network of ground stations. This delivery system minimises the latency between satellite measurements and actionable data, giving users near-real-time access to critical information.

By harnessing thermal intelligence, constellr aims to transform how industries manage resources, improving sustainability, resilience, and efficiency worldwide.

Customers and their Needs

constellr serves customers across agriculture, urban planning, infrastructure management, and sustainability, addressing critical challenges with precise thermal intelligence data.

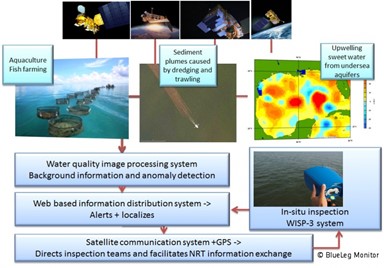

In agriculture, agribusinesses use constellr’s real-time thermal intelligence atlas to monitor crop health, soil moisture, and early signs of stress. By enabling targeted irrigation and proactive measures, constellr helps optimise water use, reduce waste, and improve yields, which is key to sustainably feeding a global population projected to reach nearly 10 billion by 2050.

Urban planners rely on constellr’s data to identify urban heat islands and develop cooling strategies, such as adding vegetation or improving materials, to create more sustainable and climate-resilient cities. The data also informs infrastructure designs that adapt to local microclimates, reducing reliance on energy-intensive cooling systems.

For the infrastructure industry, constellr’s thermal monitoring identifies vulnerabilities in transport networks during extreme weather, enabling preventative maintenance and reducing costly emergency repairs.

In sustainability, policymakers and conservation agencies rely on constellr’s insights to monitor carbon sinks, track offset projects, and regulate emissions. This data helps ensure accountability in climate action and supports global efforts to meet carbon reduction goals.

constellr empowers stakeholders across sectors with actionable insights to address resource challenges, build resilience, and drive sustainable practices.

Targeted customer countries

Pan-European: smart-farming stakeholders for whom data and farm management information systems are already the norm (e.g. Germany, the Netherlands, France, Spain, and Turkey). Beyond Europe, the key global agricultural production areas include, but are not limited to, the USA, South America, the Middle East (e.g. Israel), Asia, and, eventually, Sub-Saharan Africa.

Product description

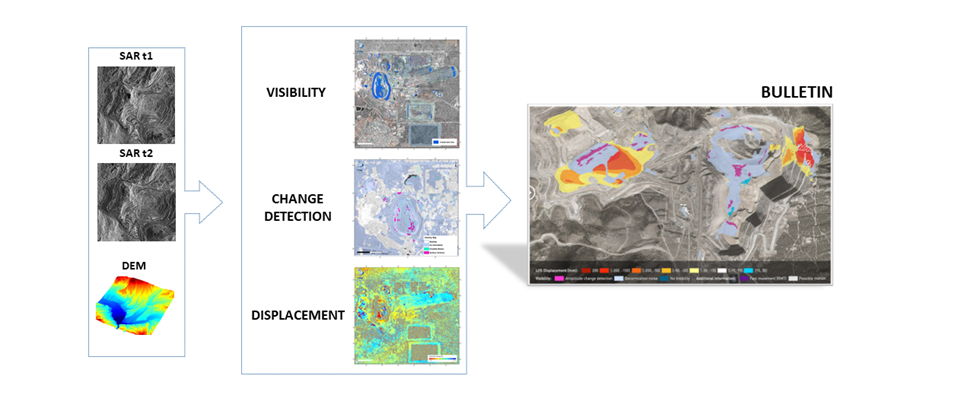

constellr’s real-time global thermal intelligence atlas uses high-precision Land Surface Temperature (LST) data to support sustainable resource management. Acting as a “digital twin” of Earth, it delivers actionable insights into vegetation, urban zones, and infrastructure, helping users better predict and manage natural and human systems with accessible solutions grounded in biophysical data.

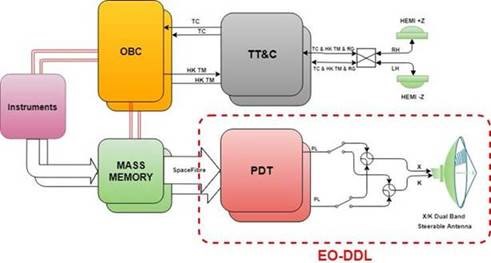

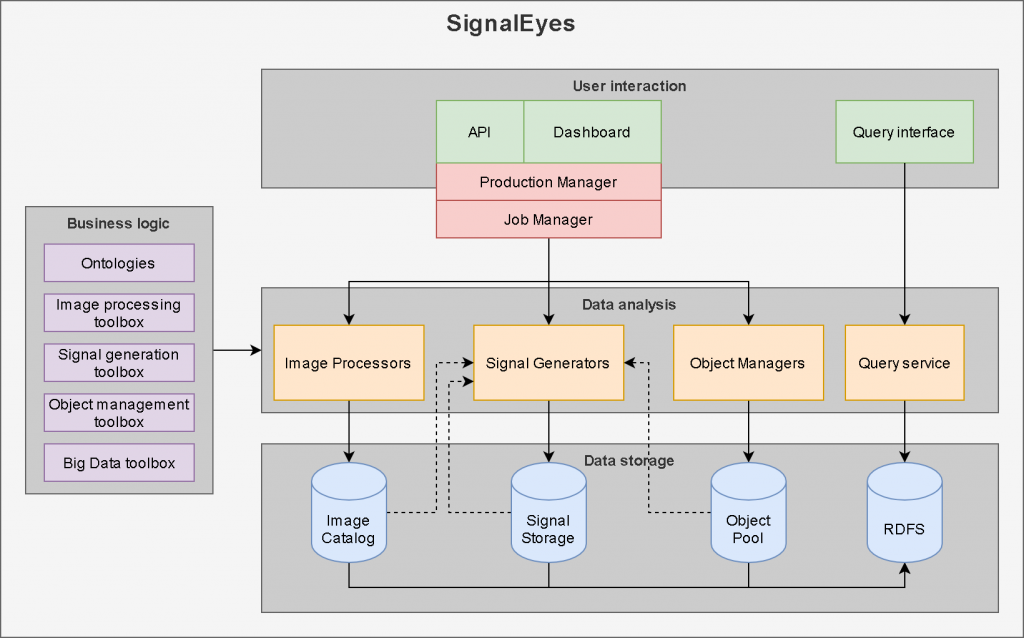

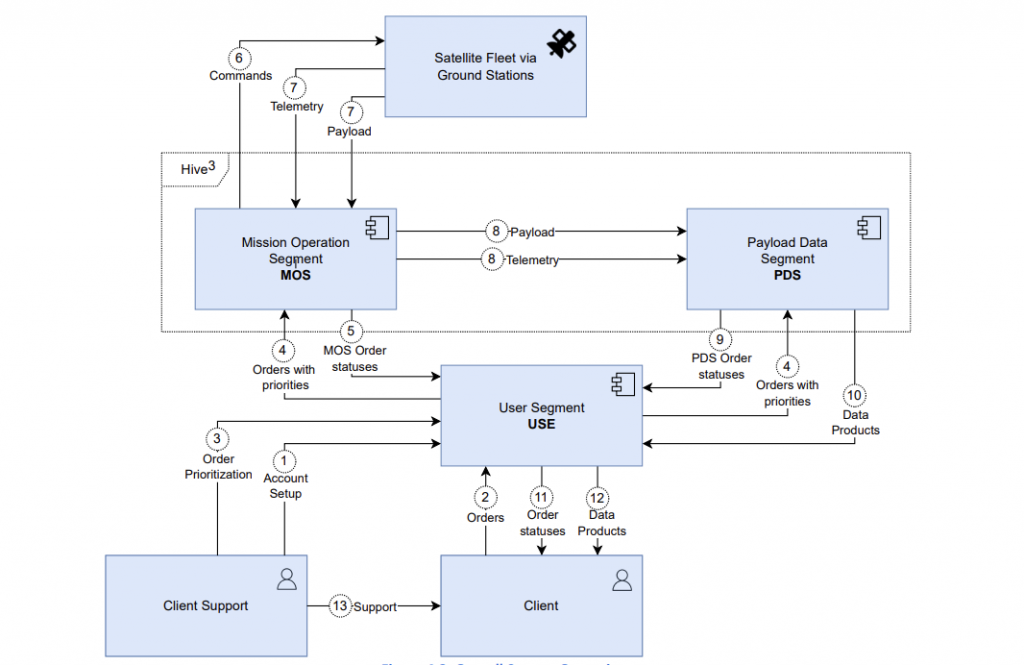

constellr’s customers can subscribe to data for their area of interest (AOI), with the cost depending on the size of the area, the revisit frequency, and the timeliness (latency) of delivery. Data recorded in the thermal infrared (TIR) and visible/near-infrared (VNIR) spectral regions are downlinked, processed to Level-2 Land Surface Temperature (LST) products, and then delivered via API to the ordering customer.
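To illustrate how the three pricing drivers named above could interact, here is a minimal toy sketch. It assumes cost grows linearly with AOI area and revisit count and carries a surcharge for delivery faster than a reference latency. The class `SubscriptionRequest`, the function `relative_cost`, and all coefficients are hypothetical placeholders, not constellr’s actual pricing model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubscriptionRequest:
    """Hypothetical AOI subscription parameters (illustrative only)."""
    area_km2: float           # size of the area of interest
    revisits_per_month: int   # how often the AOI is imaged
    max_latency_hours: float  # maximum delay from acquisition to delivery


def relative_cost(req: SubscriptionRequest,
                  rate_per_km2_visit: float = 1.0,
                  reference_latency_hours: float = 24.0) -> float:
    """Toy cost model, NOT real pricing: linear in area and revisit count,
    multiplied by a surcharge for delivery faster than a reference latency."""
    if req.area_km2 <= 0 or req.revisits_per_month <= 0 or req.max_latency_hours <= 0:
        raise ValueError("area, revisit frequency and latency must be positive")
    base = req.area_km2 * req.revisits_per_month * rate_per_km2_visit
    # Faster-than-reference delivery scales the cost up proportionally;
    # slower delivery is clamped to a factor of 1 (no discount in this sketch).
    latency_factor = max(1.0, reference_latency_hours / req.max_latency_hours)
    return base * latency_factor


if __name__ == "__main__":
    daily = SubscriptionRequest(area_km2=100, revisits_per_month=4, max_latency_hours=24)
    urgent = SubscriptionRequest(area_km2=100, revisits_per_month=4, max_latency_hours=6)
    print(relative_cost(daily))   # 400.0
    print(relative_cost(urgent))  # 1600.0
```

In this sketch, quartering the latency from 24 h to 6 h quadruples the relative cost. A real tariff would likely be tiered rather than continuous.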

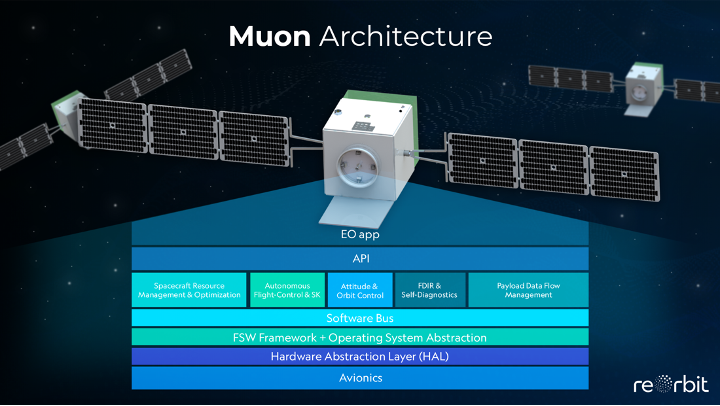

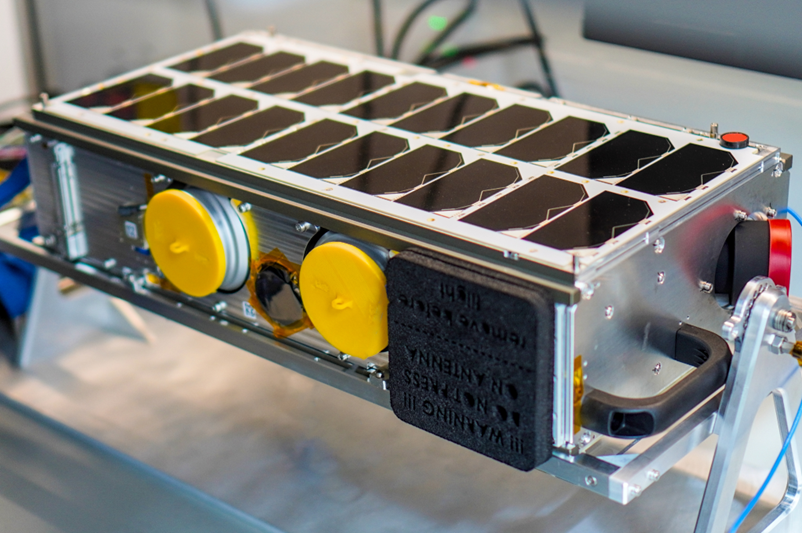

constellr’s TIR payload uses proprietary design elements in its cryocooled sensors, high-performance thermal systems, and optics to shrink traditional TIR sensor payloads enough to fit on more agile microsatellites. Novel operational concepts such as “virtual calibration” (VCAL) substantially reduce space segment costs. constellr’s data distribution platform further leverages the cost benefits of hyperscale cloud technology to distribute its critical data to a global customer base.

Added Value

Accessing high-quality geospatial data is often challenging: it is expensive, its complexity requires specialised expertise, its inconsistent availability limits trend mapping, and its low spatial resolution restricts precision applications such as targeted irrigation.

A new approach to thermal measurement addresses these barriers by using space-borne sensors that deliver high-precision temperature data. Unlike traditional methods such as drones or planes, this technology provides regularly updated data, enabling near-real-time trend mapping and rapid responses to temperature changes. This makes it particularly valuable for climate adaptation, water resource management, and sustainable agriculture.

The system is also cost-effective compared to traditional terrestrial monitoring methods. By combining orbital data with ground-based sources and presenting them in an accessible format, the solution lowers both financial and technical barriers, making advanced geospatial insights more widely available.

This approach offers a practical and scalable way to monitor and manage environmental and infrastructure systems, providing the actionable insights needed to address global challenges and improve resource efficiency.

Current Status

The de-risking activity kicked off in April 2022. Manufacturing of the proto-flight model (PFM1) is complete, and the model has completed its final environmental and functional tests. Launch is planned for the first quarter of 2025 on SpaceX’s Transporter-12 rideshare mission aboard a Falcon 9.